The End of AI Slop: Why Multi-Layer Validation is the New Marketing Standard

The credibility cost of “AI slop” in marketing

Brand damage rarely happens as a single catastrophe. It accumulates through small inconsistencies across pages, emails, and ads. Consistent brands can realize 20–33% revenue lifts. Yet most teams still publish misaligned copy when velocity outruns validation. That’s not just aesthetic. Trust and pricing power erode when your message shifts by channel.

How brand inconsistency erodes revenue

- Revenue Impact: Studies synthesizing brand-consistency ROI report 20–33% revenue gains when brands keep messaging and presentation aligned. Even modest deltas matter at scale.

- The Reality: 81% of companies deal with off-brand content. This means the average stack lacks reliable guardrails.

Is Google penalizing AI content or low quality?

Google’s guidance rewards helpful, people-first content regardless of authorship. In practice, low-effort automation gets de-emphasized while experience, expertise, and trust win. Translation: the risk isn’t “AI.” It’s shipping unvalidated, unhelpful content.

Does consistent branding really increase revenue?

Yes. Multiple analyses tie consistency to double-digit revenue lifts (often 20–33%). Even if your category lands at the low end of that range, the compounding effect across paid and organic channels makes consistency one of the most efficient ways to grow.

Why AI alone can’t guarantee quality (and what recent data shows)

Large models are powerful, but they still fabricate or over-generalize. Independent tracking shows hallucination rates ranging from sub-1% in best-case setups to ~8% or more across popular models, and higher still in domains like law and finance. At those rates, unvalidated output is catastrophic for claims and compliance.

Hallucination rates in 2024–2025: what’s realistic?

- The Numbers: Some models now report <1% hallucinations on general knowledge. Others exceed 15% depending on the task. Legal topics remain risk-prone (~6% even among top models).

- The Fix: Retrieval-augmented generation (RAG) can reduce hallucinations by ~71% when implemented correctly. But retrieval alone doesn’t enforce strategy or voice. You still need layered validation.

Can RAG and guardrails eliminate errors?

They dramatically reduce them, but not to zero. Retrieval fixes factual grounding. It doesn’t check whether the claim advances your positioning or fits your approved language. Pair RAG with strategy validators and human sign-off for reliability at publish time.

Do AI detectors work for quality control?

Detector scores are unreliable across models and rewrites. Instead of asking “was AI used,” evaluate whether outputs are accurate, on-strategy, and useful. Adopt a checklist and human co-audit pattern rather than detector gating.

The four validation layers that change outcomes

Speed is useful. Standards are non-negotiable. Snappin’s system applies four sequential gates before you ever see a draft.

Layer 1: Strategic coherence check

- What it does: Verifies every claim maps to your approved positioning, ideal customer pains, and offer. It flags off-strategy angles (like chasing irrelevant features or audiences).

- Why it matters: “Helpful” content that contradicts your strategy still confuses buyers and weakens pricing power. That confusion undermines the 20–33% revenue edge tied to consistency.

- Example catches: (1) Pros/cons sections that unintentionally favor a competitor’s model. (2) Calls to action optimized for awareness on a conversion page. (3) Claims that don’t tie back to your core value proposition.

Layer 2: Brand voice alignment

- What it does: Enforces tone, lexicon, and narrative patterns from your Brand Blueprint. Blocks generic phrasing and maintains Visionary Leader confidence.

- Why it matters: Users prefer clear, authoritative answers but penalize perceived “AI-ness” when disclosed. Voice control preserves trust.

- Example catches: (1) Passive, hedged language that dilutes authority. (2) Mixed archetypes (Caretaker in social, Ruler on web). (3) Over-casual idioms that clash with premium positioning.

Layer 3: Quality threshold filtering

- What it does: Scores drafts for factual grounding, structure, and evidence density (stats every ~150–200 words with sources). Rejects pieces lacking citations or clear next steps.

- Why it matters: AI Overviews and People Also Ask increasingly answer directly. Only citation-ready, intent-complete passages earn visibility.

- Example catches: (1) Uncited market stats. (2) Outdated references. (3) Vague recommendations without success metrics.
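
The evidence-density rule in Layer 3 can be approximated with a simple check. This is a minimal sketch under stated assumptions, not Snappin’s implementation: the function name `evidence_density_ok`, the regex that treats any percentage as a “stat,” and the 200-word default are all illustrative. It does not verify that a stat carries a source.

```python
import re

# Assumption: a "stat" is any number followed by a percent sign (e.g. "23%", "7.5 %").
STAT_PATTERN = re.compile(r"\d+(?:\.\d+)?\s?%")


def evidence_density_ok(text: str, max_words_per_stat: int = 200) -> bool:
    """Return True if the draft has at least one stat per full block of words.

    A draft of N words must contain at least N // max_words_per_stat stats.
    Drafts shorter than one block therefore pass without any stat.
    """
    word_count = len(text.split())
    stat_count = len(STAT_PATTERN.findall(text))
    required = word_count // max_words_per_stat
    return stat_count >= required
```

A stricter filter would also confirm that each stat sits next to a named source. That part is left to the human co-audit here.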

Layer 4: Human judgment integration

- What it does: A strategist reviews high-impact sections, runs a co-audit for risky claims, and approves narrative tradeoffs.

- Why it matters: Human-in-the-loop remains essential where small errors have large consequences.

- Example catches: (1) Misinterpreted competitor feature. (2) Risky legal phrasing. (3) Cultural tone misreads in global campaigns.

What each layer catches: concrete examples

- Strategic coherence: A pricing page draft recommended “freemium” messaging for an enterprise-only offer. The layer flagged the misalignment before it could cost conversions.

- Brand voice: An email sequence used playful slang that clashed with executive buyers. The voice validator rewrote with decisive, evidence-led cadence consistent with Visionary positioning.

- Quality threshold: A blog post cited a 2019 stat without context. The filter required a 2024–2025 corroboration or removal, adding newer data on search prevalence and hallucination improvements.

- Human judgment: A case study implied compliance guarantees. The strategist edited to “supports compliance workflows,” avoiding over-promise risk.

Operationalizing quality: workflows, metrics, and governance

Use a publish checklist and acceptance criteria so teams move fast without gambling brand equity.

The Workflow:

- Generate with retrieval and brand blueprint prompts.

- Run strategy coherence validator (block if off-target).

- Run voice validator (tone/lexicon diffs vs. approved style).

- Run quality threshold (facts, citations, structure).

- Human co-audit on high-risk claims and calls to action.

- Ship with versioned sources and owners.
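
The workflow above is a chain of gates that stops at the first failure. Here is a minimal sketch of that pattern. Everything named in it is hypothetical: `GateResult`, `run_gates`, and the three placeholder validators stand in for real strategy, voice, and quality checks, which would be far richer than keyword matching.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class GateResult:
    passed: bool
    reason: str = ""


# A gate takes draft text and returns a GateResult.
Gate = Callable[[str], GateResult]


def strategy_gate(draft: str) -> GateResult:
    # Placeholder rule: an enterprise-only offer should never mention "freemium".
    ok = "freemium" not in draft.lower()
    return GateResult(ok, "" if ok else "off-strategy term: freemium")


def voice_gate(draft: str) -> GateResult:
    # Placeholder lexicon of phrases that clash with the approved voice.
    banned = {"gonna", "super easy"}
    hits = sorted(p for p in banned if p in draft.lower())
    return GateResult(not hits, "" if not hits else f"off-voice phrasing: {hits}")


def quality_gate(draft: str) -> GateResult:
    # Placeholder rule: require at least one inline "Source:" marker.
    ok = "source:" in draft.lower()
    return GateResult(ok, "" if ok else "no cited source")


GATES: List[Tuple[str, Gate]] = [
    ("strategy", strategy_gate),
    ("voice", voice_gate),
    ("quality", quality_gate),
]


def run_gates(draft: str, gates: List[Tuple[str, Gate]]) -> Tuple[Optional[str], str]:
    """Run gates in order and stop at the first failure.

    Returns (failing_layer, reason), or (None, "") when every automated
    gate passes and the draft is ready for human co-audit.
    """
    for name, gate in gates:
        result = gate(draft)
        if not result.passed:
            return name, result.reason
    return None, ""
```

Ordering the cheap automated gates first means a strategist only sees drafts that have already cleared strategy, voice, and quality checks.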

KPIs to track:

- Draft rejection rate by layer (aim: most rejections happen before human review).

- Fact-error rate at editorial QA (trend toward near-zero).

- “Intent completeness” score for target queries.

- Consistency score across channels (voice and CTA alignment).
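
The first KPI is easy to compute once each draft is logged with the layer that rejected it. A minimal sketch, assuming a hypothetical log format where every draft is recorded as a layer name or `None` if it was approved:

```python
from collections import Counter
from typing import Dict, List, Optional


def rejection_rate_by_layer(outcomes: List[Optional[str]]) -> Dict[str, float]:
    """Share of all drafts rejected at each layer.

    Each entry in `outcomes` is the name of the rejecting layer,
    or None when the draft was approved.
    """
    total = len(outcomes)
    if total == 0:
        return {}
    counts = Counter(o for o in outcomes if o is not None)
    return {layer: n / total for layer, n in counts.items()}


def pre_human_share(outcomes: List[Optional[str]], human_layer: str = "human") -> float:
    """Fraction of all rejections that happened before human review."""
    rejections = [o for o in outcomes if o is not None]
    if not rejections:
        return 0.0
    return sum(1 for o in rejections if o != human_layer) / len(rejections)
```

For example, with ten drafts where two fail strategy, one fails voice, and one is rejected by the strategist, `pre_human_share` returns 0.75: three of four rejections happened before a human spent time on the draft.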

People also ask: How do I set up human-in-the-loop review efficiently?

Adopt tiered review. Reserve strategist time for high-impact assets (home, pricing, key funnels). Use co-audit tools to surface risky claims automatically, then approve or revise in one pass. This retains speed while keeping error rates low where they matter.

Answer Engine Optimization (AEO): direct answers need validated facts

Google increasingly answers queries directly through AI Overviews and AI-generated People Also Ask (PAA) results. Your best shot at visibility is providing concise, citation-ready answers that fully satisfy intent. Recent analyses show 12–38% of PAA answers are now AI-generated, with the share rising in 2025. Weak, incomplete pages get bypassed.

PAA and AI Overviews are growing: what it means for you

When Google fills answers itself, it’s signaling gaps in publisher content. Build answer blocks with clean structure, up-to-date citations, and explicit takeaways. This earns citations from AI systems and sustains visibility in a click-light future.

AEO checklist: making your answers citation-ready

- Lead with a 40–60 word direct answer that a model can quote.

- Include corroborated, recent stats and name the source in-line.

- Use scannable H2/H3s and question-style headers matching intent.

- Keep brand voice consistent across channels to preserve trust and pricing power.
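
The first checklist item is simple to automate. A minimal sketch, assuming the direct answer is the first paragraph of the page and that paragraphs are separated by blank lines; the function name `lead_answer_in_range` is illustrative:

```python
def lead_answer_in_range(page_text: str, min_words: int = 40, max_words: int = 60) -> bool:
    """Return True if the first non-empty paragraph has min_words..max_words words."""
    paragraphs = [p.strip() for p in page_text.split("\n\n") if p.strip()]
    if not paragraphs:
        return False
    word_count = len(paragraphs[0].split())
    return min_words <= word_count <= max_words
```

A word count only confirms the length of the answer block. Whether the answer actually satisfies the query still needs the quality and human layers.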

When to book a Brand Blueprint demo (and what you’ll see)

If you’ve shipped anything that felt “close but off,” it’s time. In our 30-minute session, you’ll see:

- Your messaging mapped to customer pains and value pillars (Layer 1).

- Live voice diffs showing where drafts drift and how we correct them (Layer 2).

- Automated evidence checks that block weak sourcing and outdated stats (Layer 3).

- A strategist’s co-audit on your highest-risk page with recommendations (Layer 4).

Bonus: We show how RAG plus validators reduce factual error risk while preserving your story. You can scale content without sacrificing credibility.

Conclusion

Quality isn’t a final proofread. It’s a system. Most AI content fails because it isn’t validated for strategy, brand voice, or evidence before it goes live. A multi-layer approach changes that, converting speed into trust and revenue.

Next steps:

1) Adopt the four validation layers on your top three revenue pages.

2) Add an AEO-ready answer block with fresh citations to each.

3) Track rejection and error rates by layer for 30 days to baseline improvement.

Ready to see it in action on your brand? Book a Brand Blueprint demo and watch the validators catch what generic tools miss. Consistency and correctness are the new moat. You can operationalize both today.

Snappin Team

Strategy-first marketing insights from the team building Snappin — the AI Marketing Copilot that combines strategy, content creation, and scheduling in one platform.

Try Snappin freeContinue Reading

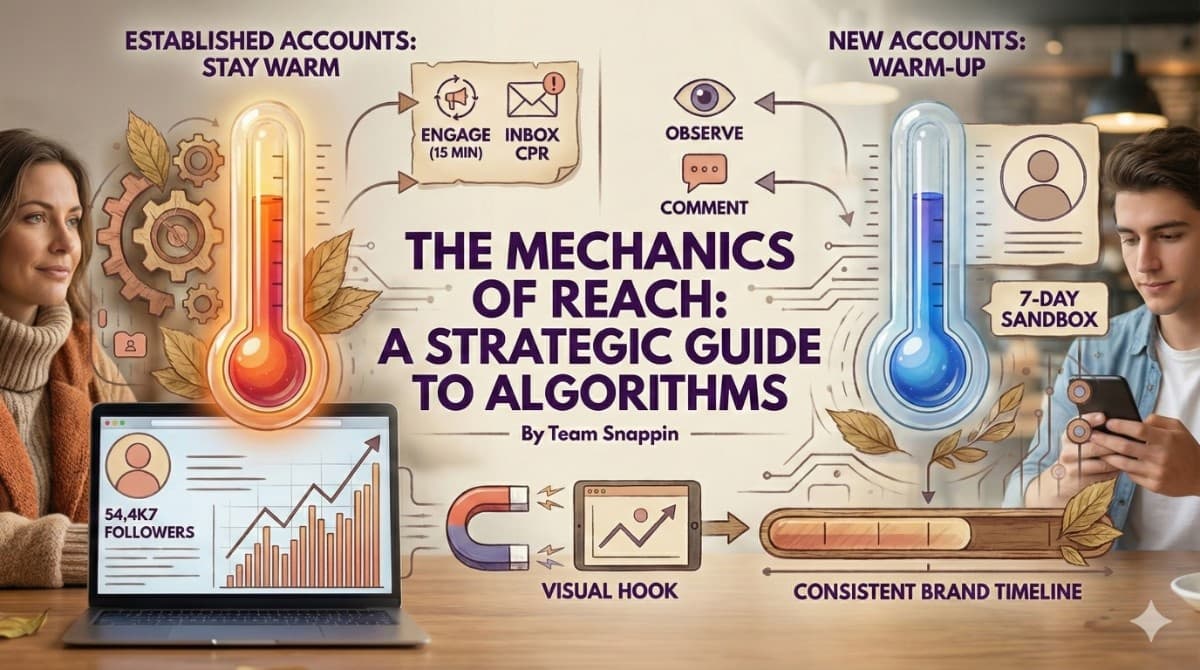

The Mechanics of Reach: A Strategic Guide to Social Media Algorithms

If you want consistent reach, stop looking for "viral hacks." Building a real brand on social media isn't about winning a lottery; it’s about understanding how the platforms actually work.

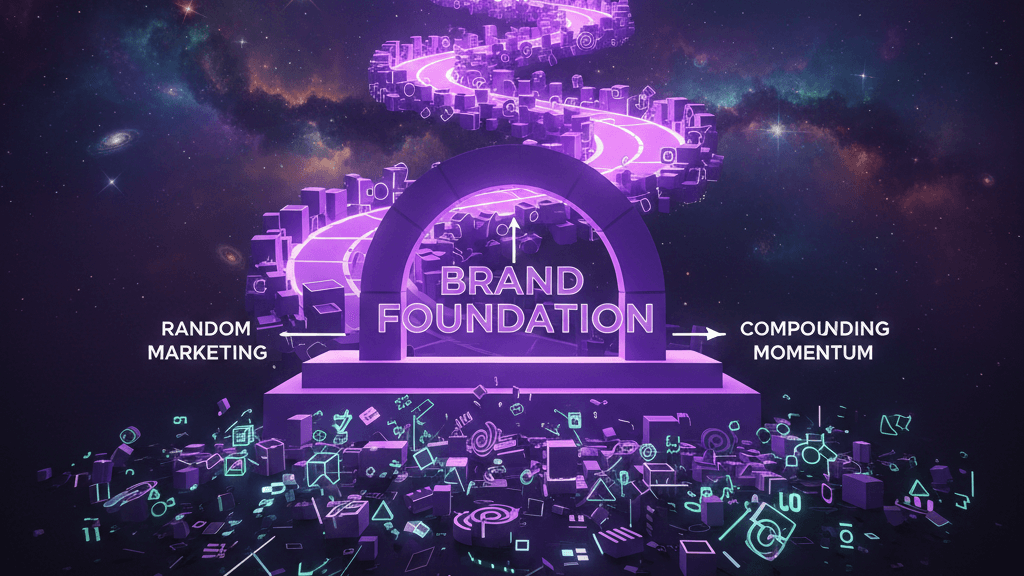

How Brand Foundation Transforms Random Marketing Into Compounding Momentum

You're posting on social media. Running ads. Sending emails. Attending events. Each activity feels productive in isolation, but six months later, you look back and see... what, exactly? More followers who don't convert. Campaign results that don't repeat. Traction that evaporates the moment you stop

Strategic Marketing Foundation: Why Strategy Must Precede Content Velocity

A strategic marketing foundation (clear positioning, competitive differentiation, and messaging architecture) multiplies ROI and prevents the diminishing returns of volume-first content. If you publish before you position, you pay twice. Once in wasted budget, then again to fix perception. This guid